PostgreSQL 9.3 has a new join type! Lateral joins arrived without a lot of fanfare, but they enable some powerful new queries that were previously only tractable with procedural code. In this post, I’ll walk through a conversion funnel analysis that wouldn’t be possible in PostgreSQL 9.2.

What is a LATERAL join?

The best description in the documentation comes at the bottom of the list of FROM clause options:

The LATERAL key word can precede a sub-SELECT FROM item. This allows the sub-SELECT to refer to columns of FROM items that appear before it in the FROM list. (Without LATERAL, each sub-SELECT is evaluated independently and so cannot cross-reference any other FROM item.)

…

When a FROM item contains LATERAL cross-references, evaluation proceeds as follows: for each row of the FROM item providing the cross-referenced column(s), or set of rows of multiple FROM items providing the columns, the LATERAL item is evaluated using that row or row set’s values of the columns. The resulting row(s) are joined as usual with the rows they were computed from. This is repeated for each row or set of rows from the column source table(s).

This is a bit dense. Loosely, it means that a LATERAL join is like a SQL foreach loop, in which PostgreSQL will iterate over each row in a result set and evaluate a subquery using that row as a parameter.

What can we do with this?

Consider a table of click events with the following schema:

Each event is associated with a user and has an ID, a timestamp, and a JSON blob with the event’s properties. At Heap, these properties might include the DOM hierarchy of a click, the window title, the session referrer, and so forth.

Let’s say we want to optimize our landing page to increase signups. The first step is to figure out where we’re losing users in our conversion funnel.

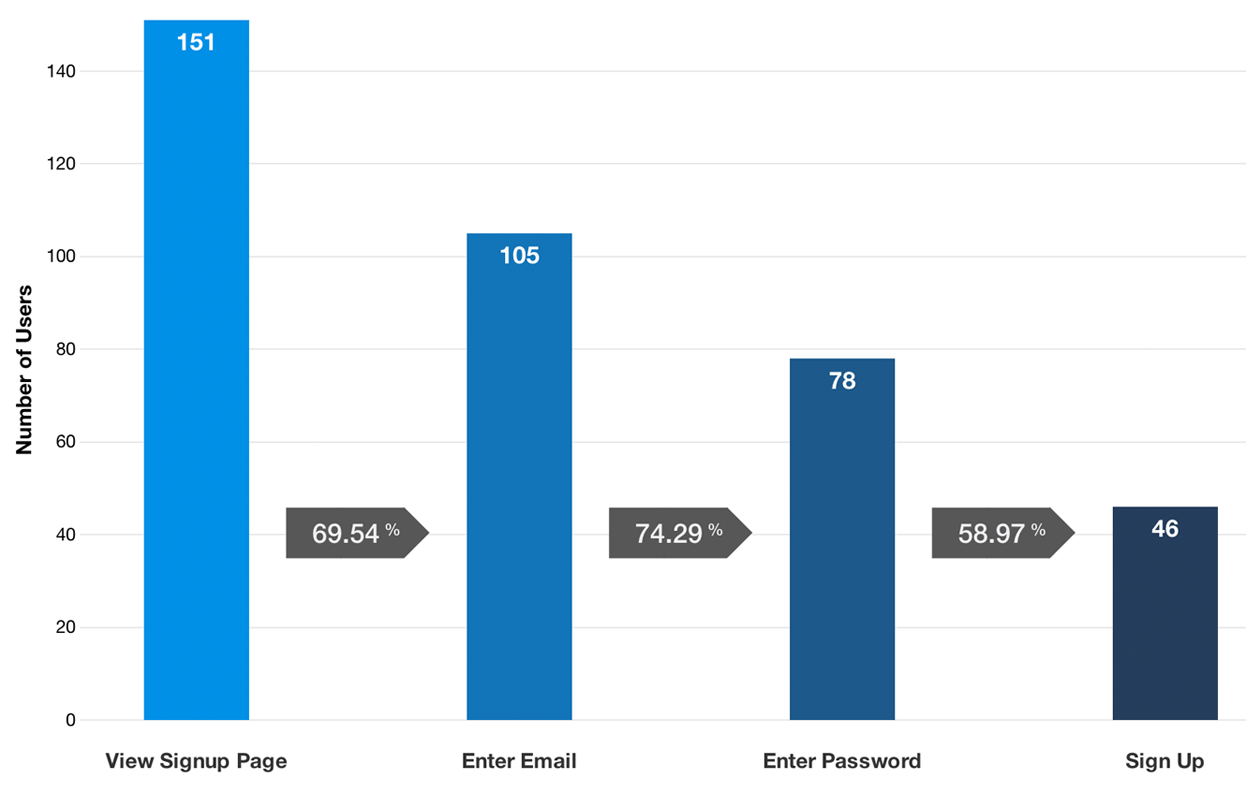

An example conversion funnel between four steps in a signup flow.

We’ll assume that we’ve instrumented our frontend to log events along this flow and that all of the data lives in the event table specified above. As an initial question, let’s figure out how many people view our homepage and what percentage of them enter a credit card within two weeks of that initial homepage view. If we were using an older version of PostgreSQL, we might write some custom functions in PL/pgSQL, PostgreSQL’s builtin procedural language. But, in 9.3, we can use a lateral join to compute this in one efficient query, with no extensions or PL/pgSQL.

Nobody likes 30-line SQL queries, so let’s break this down into pieces. The first chunk of this is vanilla SQL:

That is, get the initial time each user did a view_homepage event. Then our lateral join allows us to iterate over each resulting row and perform a parametrized version of the next subquery. This is equivalent to taking the query below and running it for each resulting row:

I.e., for each user, get the first time he or she performed the enter_credit_card event within two weeks of view_homepage_time. Because this is a lateral join, our subquery can make reference to the view_homepage_time results from the previous subquery. Otherwise, the subqueries would be evaluated independently and there would be no way to access results from one when evaluating the other.

Then we wrap the whole thing in a select, which returns something like this:

Because this is a LEFT JOIN, the query still produces result rows for users with no matching enter_credit_card event, as long as there is a view_homepage event. If we aggregate over the numerical columns, we get a tidy summary of this conversion funnel:

… which produces:

We can add intermediate steps to this funnel with more lateral joins to evaluate which portions of our flow we should focus on improving. Let’s add a use_demo step between viewing the homepage and entering a credit card.

This yields:

This gives us the three-step conversion funnel from viewing the homepage to using the demo within a week to entering the credit card within a week of that. From here, the expressive power of PostgreSQL allows us to drill down on these results and thoroughly analyze the performance of our website. We might follow up with:

- Does using the demo increase the likelihood of a signup?

- Do users who discover our homepage via an ad convert with the same likelihood as users from other sources?

- How do these conversion rates change over time or with different A/B test variants?

The answers to these questions apply directly to product changes and can be determined in PostgreSQL now that it supports lateral joins.

Without lateral joins, we would need to resort to PL/pgSQL to do this analysis. Or, if our data set were small, we could get away with complex, inefficient queries. In an exploratory data science use case, you might just pull your data out of PostgreSQL and analyze it with your scripting language of choice. But there is considerable power in being able to express these questions in SQL, especially if you’re wrapping it all in an approachable UI and exposing the functionality to nontechnical users.

Note that these queries can be tuned so as to be very efficient. In this example, if we create a btree index on (user_id, (data->>’type’), time), we can evaluate each funnel step for each user with a single indexed lookup. If you’re using SSDs, on which seeks are cheap, this might be good enough. If not, you might need to schematize your data a bit differently, but I’ll leave the details of that for another post.

Have a favorite new PostgreSQL feature or a neat lateral join use case?

Interested in building systems that make powerful features easy to use? Shoot us a note at

[1] Or we can use Heap, which captures everything for us as soon as we install it! No need to write any logging code, and no risk of forgetting to log something that you’ll want to analyze later.

[2] Note that adding additional steps to a conversion funnel would be particularly easy if we were using product analytics like Heap, since we would already have the relevant data.

[3] The number of users with enter_credit_card events is lower in this query than in the previous one, as this query only returns enter_credit_card events for users who do so after doing the use_demo event, and 17 of the users signed up without using the demo.

by

by

No comments:

Post a Comment

It’s all about friendly conversation here at small review :) I’d love to be hear your thoughts!

Be sure to check back again because I do make every effort to reply to your comments here.